Harbor Registry - Automating LDAP/S Configuration - Part 1

The Harbor Registry is part of many of my Kubernetes implementations in the field, and in almost every one I am asked about the options for configuring LDAP/S authentication for the registry. Unfortunately, neither the community Helm chart nor the Tanzu Harbor package provides native inputs for this setup. Fortunately, the Harbor REST API lets you configure LDAP programmatically. Automating this process gives you consistency across environments, faster deployments, and less room for human error.

This post, the first in a two-part series, focuses on automating LDAP configuration using Ansible, running externally from the Kubernetes cluster hosting Harbor. In the next post, we’ll explore a different approach using Terraform to run the automation within the Kubernetes cluster.

Prerequisites

- A running Harbor Registry instance deployed on Kubernetes.

- Administrator credentials for Harbor.

- API access to the Harbor instance from the machine or server running Ansible.

LDAPS Clarification

Before we get to the automation, one important clarification: if you are configuring LDAPS (secure LDAP), Harbor (specifically the harbor-core pod) must trust your LDAPS certificate. Here is how to achieve this:

If you are using the goharbor/harbor-helm community Helm chart, include the caBundleSecretName parameter in your values.yaml file and set it to a secret name containing your CA certificate(s). The secret containing the CA certificate should be similar to the following:

    apiVersion: v1
    kind: Secret
    metadata:
      name: ca-cert
      namespace: harbor
    stringData:
      ca.crt: |
        -----BEGIN CERTIFICATE-----
        MIIDojCCAoqgAwIBAgIQSQ5hCqC2GqhFn+ZNGqdPkTANBgkqhkiG9w0BAQsFADBP
        MRQwEgYKCZImiZPyLGQBGRYEZGVtbzEXMBUGCgmSJomT8ixkARkWB3RlcmFza3kx
        HjAcBgNVBAMTFXRlcmFza3ktREVNTy1EQy0wMS1DQTAgFw0yMjA2MjQxMDIzMjla
        GA8yMDcyMDYyMzEw....
        -----END CERTIFICATE-----

And the values.yaml file would be similar to the following:

    # Secret containing the CA certificate(s) for LDAPS
    caBundleSecretName: ca-cert

    # Admin password
    harborAdminPassword: SomeP@ssw0rd!

    ...<redacted>

I included a complete example of the above in the GitHub repository under the harbor-ldaps-references/harbor-community-helm-chart folder.

If you are using the Harbor Tanzu package, you have to implement a YTT overlay to achieve this:

    #@ load("@ytt:overlay", "overlay")

    #@overlay/match by=overlay.subset({"kind": "Deployment", "metadata":{"name":"harbor-core"}}),expects=1
    ---
    spec:
      template:
        spec:
          containers:
          #@overlay/match by="name"
          - name: core
            volumeMounts:
            #@overlay/append
            - mountPath: /etc/harbor/ssl/ldaps
              name: ldaps-cert
              readOnly: true
          volumes:
          #@overlay/append
          - name: ldaps-cert
            secret:
              secretName: harbor-ldaps-cert
              defaultMode: 420
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: harbor-ldaps-cert
      namespace: tanzu-system-registry
    type: Opaque
    stringData:
      ca.crt: |
        -----BEGIN CERTIFICATE-----
        MIIDojCCAoqgAwIBAgIQSQ5hCqC2GqhFn+ZNGqdPkTANBgkqhkiG9w0BAQsFADBP
        MRQwEgYKCZImiZPyLGQBGRYEZGVtbzEXMBUGCgmSJomT8ixkARkWB3RlcmFza3kx
        HjAcBgNVBAMTFXRlcmFza3ktREVNTy1EQy0wMS1DQTAgFw0yMjA2MjQxMDIzMjla
        GA8yMDcyMDYyMzEw....
        -----END CERTIFICATE-----

I included a complete example of the above in the GitHub repository under the harbor-ldaps-references/harbor-tanzu-package folder.

And to include the YTT overlay in your Harbor package deployment:

    tanzu package install harbor \
      --package harbor.tanzu.vmware.com \
      --version "$HARBOR_PACKAGE_VERSION" \
      --values-file harbor-data-values.yaml \
      --ytt-overlay-file ldaps-cert-overlay.yaml \
      --namespace tkg-packages

Setup and Deployment

My GitHub repository contains an Ansible role that can be used to configure LDAP/S for Harbor. Upon configuring, it also verifies the connection between Harbor and the LDAP server.
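For readers who want to see what configuring Harbor through its REST API involves, here is a minimal Python sketch. It is not the code of the Ansible role. It assumes that Harbor's v2.0 API accepts a PUT to /api/v2.0/configurations with HTTP basic authentication; the function names build_config_request and apply_config are hypothetical.

```python
import base64
import json
import urllib.request


def build_config_request(hostname, username, password, config):
    """Build (but do not send) a PUT request for Harbor's configurations endpoint."""
    credentials = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        # Assumption: Harbor serves its v2.0 API under /api/v2.0 on this host.
        url=f"https://{hostname}/api/v2.0/configurations",
        data=json.dumps(config).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/json",
        },
    )


def apply_config(request, timeout=30):
    """Send the request and return the HTTP status; urllib raises HTTPError on 4xx/5xx."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Building the request separately from sending it means you can inspect the URL, method, and body without touching a live Harbor instance. Passing the result of build_config_request to apply_config would then send the change.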

Clone the repository and cd into the ansible/harbor-ldap-config directory inside it.

    git clone https://github.com/itaytalmi/harbor-registry-ldap.git
    cd harbor-registry-ldap/ansible/harbor-ldap-config

Review the vars.yml file and update it with your configuration. I believe all of the parameters are quite self-explanatory. For example:

    # Harbor authentication
    harbor_hostname: harbor.cloudnativeapps.cloud
    harbor_username: admin
    harbor_password: Kubernetes1!

    # LDAP configuration
    ldap_url: ldaps://cloudnativeapps.cloud:636
    ldap_search_dn: CN=k8s-ldaps,OU=ServiceAccounts,OU=cloudnativeapps,DC=cloudnativeapps,DC=cloud
    ldap_search_password: Kubernetes1!
    ldap_base_dn: DC=cloudnativeapps,DC=cloud
    ldap_filter: objectclass=person
    ldap_uid: sAMAccountName
    ldap_scope: 2 # Subtree
    ldap_group_base_dn: DC=cloudnativeapps,DC=cloud
    ldap_group_search_filter: objectclass=group
    ldap_group_attribute_name: sAMAccountName
    ldap_group_admin_dn: CN=harbor-admins,OU=Groups,OU=cloudnativeapps,DC=cloudnativeapps,DC=cloud
    ldap_group_membership_attribute: memberof
    ldap_group_search_scope: 2 # Subtree
    ldap_verify_cert: true

Note that if you are using LDAPS and Harbor does not trust your LDAPS certificate, you can either follow the steps above to add the certificate to the Harbor pod’s trust store, or set ldap_verify_cert to false in your vars.yml file. If LDAPS is not enabled on your LDAP server at all, set ldap_url to the plain LDAP URL instead (typically ldap://your-ldap-server:389).
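To make the relationship between vars.yml and the request body sent to Harbor more concrete, here is a small Python sketch of how such a payload could be assembled. It assumes that every ldap_* variable maps one-to-one to a Harbor configuration key and that auth_mode is set to ldap_auth. The scope values 0 (base) and 1 (one level) are my reading of the Harbor API documentation; only 2 (subtree) appears in the vars.yml above. The names build_ldap_config and LdapScope are hypothetical.

```python
from enum import IntEnum


class LdapScope(IntEnum):
    """LDAP search scopes; 2 (subtree) is the value used in vars.yml."""
    BASE = 0       # assumption: base-level search
    ONE_LEVEL = 1  # assumption: one-level search
    SUBTREE = 2


def build_ldap_config(settings):
    """Return a Harbor configuration payload built from vars.yml-style settings."""
    # Keep only the LDAP settings; harbor_* connection variables are not part of the payload.
    config = {key: value for key, value in settings.items() if key.startswith("ldap_")}
    config["auth_mode"] = "ldap_auth"
    # Reject scope values outside 0, 1, and 2 early (LdapScope raises ValueError).
    for key in ("ldap_scope", "ldap_group_search_scope"):
        if key in config:
            config[key] = int(LdapScope(config[key]))
    return config
```

Validating the scope values before anything is sent means a typo in vars.yml fails fast on your side, rather than surfacing as a rejected request or a search that silently finds no users.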

You can also refer to the Configure LDAP/Active Directory Authentication page in the official Harbor documentation for more information on the required configuration.

To run the playbook:

    ansible-playbook playbook.yml

Example output:

    PLAY [Configure Harbor LDAP] **************************************************************************************************************************************

    TASK [harbor-ldap-config : Set Fact - Harbor LDAP Configuration JSON] *********************************************************************************************
    ok: [localhost]

    TASK [harbor-ldap-config : Print Harbor LDAP Desired Configuration JSON] ******************************************************************************************
    ok: [localhost] => {
        "harbor_ldap_config_json": {
            "auth_mode": "ldap_auth",
            "ldap_base_dn": "DC=cloudnativeapps,DC=cloud",
            "ldap_filter": "objectclass=person",
            "ldap_group_admin_dn": "CN=harbor-admins,OU=Groups,OU=cloudnativeapps,DC=cloudnativeapps,DC=cloud",
            "ldap_group_attribute_name": "sAMAccountName",
            "ldap_group_base_dn": "DC=cloudnativeapps,DC=cloud",
            "ldap_group_membership_attribute": "memberof",
            "ldap_group_search_filter": "objectclass=group",
            "ldap_group_search_scope": 2,
            "ldap_scope": 2,
            "ldap_search_dn": "CN=k8s-ldaps,OU=ServiceAccounts,OU=cloudnativeapps,DC=cloudnativeapps,DC=cloud",
            "ldap_search_password": "Kubernetes1!",
            "ldap_uid": "sAMAccountName",
            "ldap_url": "ldaps://cloudnativeapps.cloud:636",
            "ldap_verify_cert": true
        }
    }

    TASK [harbor-ldap-config : Configure Harbor LDAP Authentication] **************************************************************************************************
    ok: [localhost]

    TASK [harbor-ldap-config : Test Harbor LDAP Connection] ***********************************************************************************************************
    ok: [localhost]

    TASK [harbor-ldap-config : Print Test Harbor LDAP Connection Results] *********************************************************************************************
    ok: [localhost] => {
        "harbor_ldap_test_result.json": {
            "success": true
        }
    }

    PLAY RECAP ********************************************************************************************************************************************************
    localhost : ok=5 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

As you can see, the Ansible role outputs the desired configuration before applying it (very useful for troubleshooting), and tests the connection between Harbor and LDAP. If you have the following entry in the output, you are all set:

    "harbor_ldap_test_result.json": {
        "success": true
    }
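The same check can be scripted outside Ansible, for example as a gate in a pipeline. The Python sketch below assumes that Harbor's v2.0 API exposes POST /api/v2.0/ldap/ping and answers with a JSON object containing a success field, which matches the entry above. It also assumes that a request without a body tests the LDAP configuration currently stored in Harbor. The function names are hypothetical.

```python
import base64
import json
import urllib.request


def build_ping_request(hostname, username, password):
    """Build (but do not send) a POST request for Harbor's LDAP ping endpoint."""
    credentials = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        # Assumption: no request body means "test the configuration stored in Harbor".
        url=f"https://{hostname}/api/v2.0/ldap/ping",
        method="POST",
        headers={"Authorization": f"Basic {credentials}"},
    )


def ldap_ping_succeeded(response_body):
    """Return True only if the ping response explicitly reports success."""
    result = json.loads(response_body)
    return result.get("success") is True


def ping_ldap(request, timeout=30):
    """Send the ping request and interpret the response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return ldap_ping_succeeded(response.read())
```

Treating anything other than an explicit "success": true as a failure keeps the gate conservative: a missing field or an error message in the response fails the pipeline step instead of passing silently.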

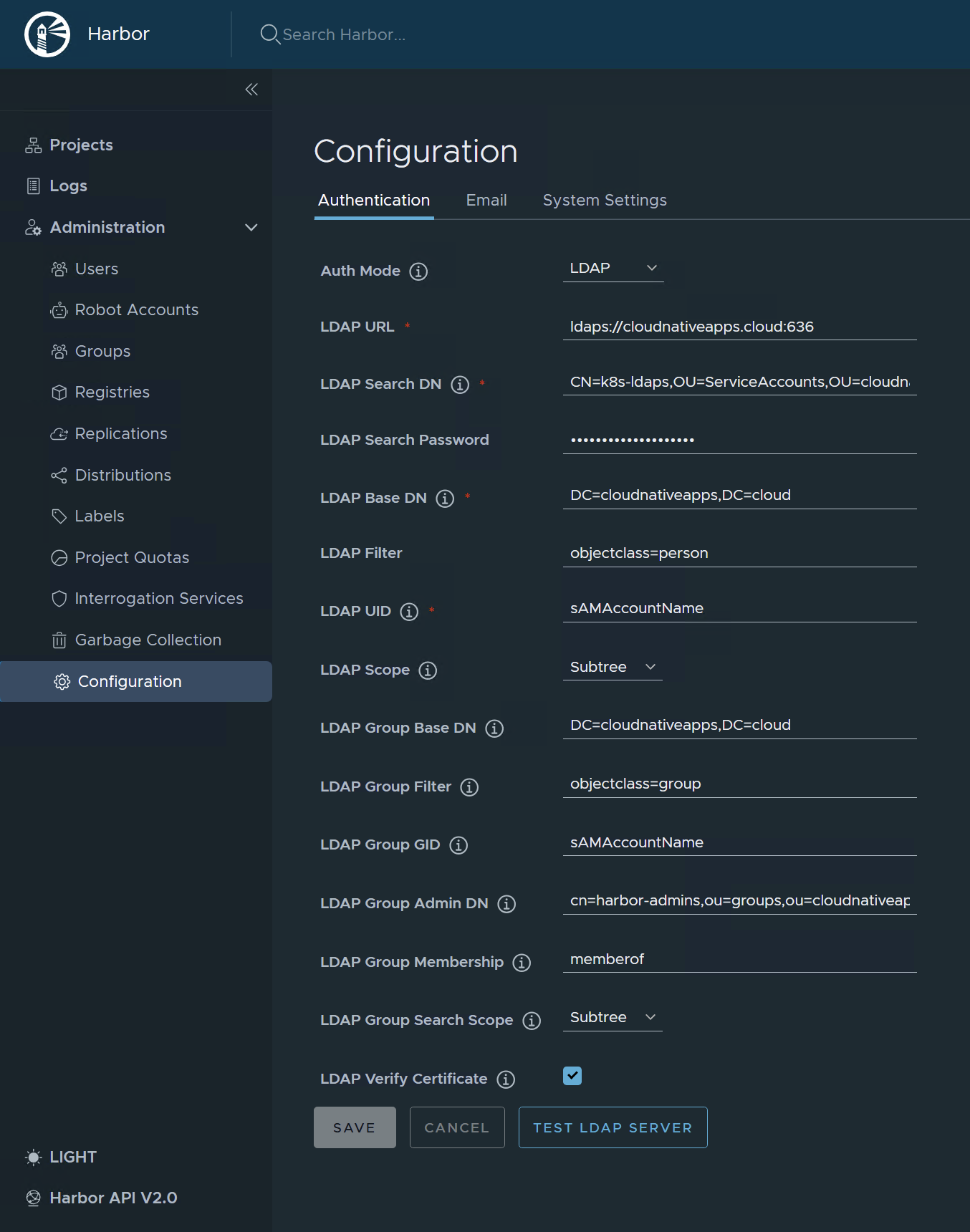

In the Harbor UI, you can also see this configuration under Configuration -> Authentication:

You should now be able to authenticate to Harbor using an LDAP user.

Wrap Up

This approach gives you an easy, repeatable way to configure Harbor LDAP/S authentication in an orchestrated manner as part of a pipeline.
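In a pipeline, you will usually not want passwords committed in vars.yml. One option, sketched below in Python, is to write the sensitive values to a temporary file and pass it to ansible-playbook as extra vars, which take precedence over other variable sources in Ansible. The function names are hypothetical, and the sketch assumes the playbook is named playbook.yml as in the command shown earlier.

```python
import json
import os
import tempfile


def write_extra_vars(overrides, directory=None):
    """Write overrides to a temporary JSON file (owner-only permissions) and return its path."""
    fd, path = tempfile.mkstemp(suffix=".json", dir=directory)
    with os.fdopen(fd, "w") as handle:
        json.dump(overrides, handle)
    return path


def build_playbook_command(extra_vars_path, playbook="playbook.yml"):
    """Return the ansible-playbook argument list, loading extra vars from a file."""
    # "--extra-vars @file" makes Ansible read the variables from the given file.
    return ["ansible-playbook", playbook, "--extra-vars", f"@{extra_vars_path}"]
```

A pipeline step could read the secrets from its own secret store, call write_extra_vars, run the returned command with subprocess.run(..., check=True), and delete the file afterwards. Using a file rather than inline key=value arguments keeps the passwords out of the process list.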

In the next post, we’ll take a different approach using a Terraform-based job within Kubernetes itself, for more restricted environments.