Is your TKG cluster name too long, or is it your DHCP Server…?

While working on a TKGm implementation project recently, I ran into an issue that seemed very odd, as I had never encountered such behavior in any previous implementation.

A workload cluster deployment hung right after the first control plane node was deployed. Up to that point, everything looked fine: the deployment had started and NSX ALB had successfully allocated a control plane VIP. After that, however, the deployment stalled completely and made no further progress.

I started investigating by connecting to the control plane node over SSH to check whether the cluster had been initialized (`kubeadm init`). It quickly became clear that it had not.

```console
$ ssh <user>@<control-plane-node-ip>
$ sudo -i
$ export KUBECONFIG=/etc/kubernetes/admin.conf
$ kubectl get nodes
No resources found
```

There were also no hints whatsoever in the deployment output. It simply hung.

```console
$ tanzu cluster create -f tkg-wld-cls.yaml
Validating configuration...
Creating workload cluster 'just-a-really-long-cluster-name'...
Waiting for cluster to be initialized...
cluster control plane is still being initialized: WaitingForControlPlane
cluster control plane is still being initialized: ScalingUp
```

Since the cluster had not even initialized, the cloud-init logs seemed like the right place to look, as cloud-init is responsible for running `kubeadm init`.

Sure enough, they showed that the control plane node name was too long.

```console
$ less /var/log/cloud-init-output.log
...
[2022-06-18 19:04:20] hostname: name too long
[2022-06-18 19:04:20] /usr/sbin/update-ca-certificates
[2022-06-18 19:04:20] Updating certificates in /etc/ssl/certs...
[2022-06-18 19:04:21] 1 added, 0 removed; done.
[2022-06-18 19:04:21] Running hooks in /etc/ca-certificates/update.d...
[2022-06-18 19:04:21] done.
[2022-06-18 19:04:21] [init] Using Kubernetes version: v1.22.9+vmware.1
[2022-06-18 19:04:21] [preflight] Running pre-flight checks
[2022-06-18 19:04:22] [WARNING Hostname]: invalid node name format "just-a-really-long-cluster-name-control-plane-xgfcf.my.really.long.domain.name": name part must be no more than 63 characters
```

At first, this made no sense to me: I had used long cluster names before (in fact, I had used this very cluster name in many other environments) and had never run into this issue.

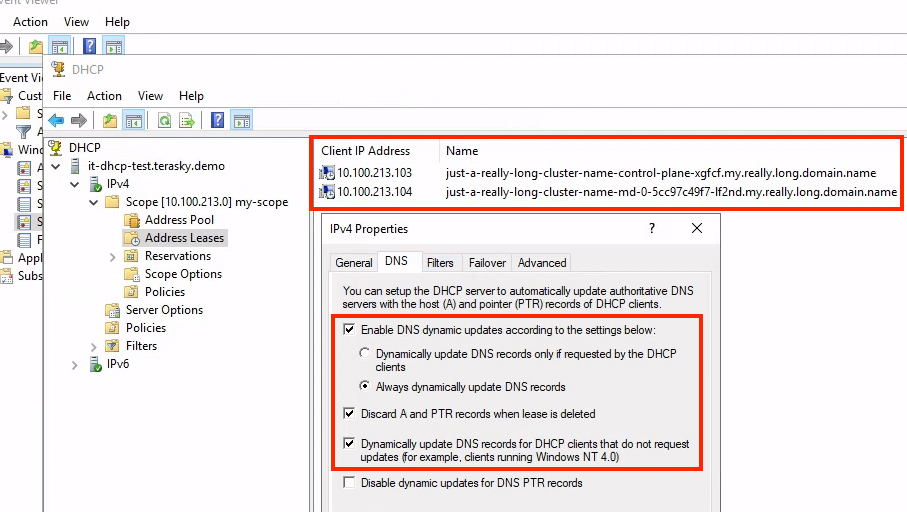

After further investigation, it turned out that in this particular environment, the DHCP server (which is Microsoft-based) was configured to register all of its clients in DNS. As a result, the node OS uses its resolvable FQDN as its hostname rather than just the short name, so the DNS domain counts toward the length of the node name.
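To illustrate why the same cluster name had worked elsewhere, here is a minimal bash sketch that composes a node FQDN from a short hostname and a DNS domain and compares its length with the 63-character limit quoted in the kubeadm warning above. The function `check_node_fqdn` is hypothetical, and applying the limit to the whole FQDN is an assumption drawn from the behavior described in this post.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of TKG or Cluster API): compose the node FQDN
# from a short hostname and a DNS domain, then compare its length with 63,
# the limit quoted in the kubeadm warning. Applying that limit to the whole
# FQDN is an ASSUMPTION based on the behavior described in this post.
check_node_fqdn() {
  local short_name="$1" domain="$2" limit=63 fqdn len
  # Without DNS registration, the node name is just the short name.
  if [ -z "$domain" ]; then
    fqdn="$short_name"
  else
    fqdn="${short_name}.${domain}"
  fi
  len=${#fqdn}
  if [ "$len" -le "$limit" ]; then
    echo "OK ${len} ${fqdn}"
    return 0
  fi
  echo "TOO LONG ${len} ${fqdn}"
  return 1
}

# Same control plane short name, without and with the registered DNS domain.
for domain in "" my.really.long.domain.name; do
  check_node_fqdn just-a-really-long-cluster-name-control-plane-xgfcf "$domain" \
    || echo "  -> shorten the cluster name or the domain"
done
```

Run as-is, the first call reports a length of 51 (OK) and the second a length of 78 (TOO LONG): the short name alone fits comfortably, and only the appended domain pushes the node name past the limit.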

While Cluster API performs several validations and pre-checks before deployment, some conditions are purely OS-level or environmental, and Cluster API cannot anticipate them as part of those checks.

Once I figured this out, I shortened the cluster name so that the resulting node names would be no longer than 63 characters. When the cluster initialized, I noticed that the nodes still joined the cluster using their FQDNs, but this time the cluster creation succeeded.

```console
$ ssh <user>@<control-plane-node-ip>
$ sudo -i
$ export KUBECONFIG=/etc/kubernetes/admin.conf
$ kubectl get nodes
NAME                                                              STATUS   ROLES                  AGE     VERSION
a-shorter-name-control-plane-4cd46.my.really.long.domain.name     Ready    control-plane,master   56s     v1.22.9+vmware.1
a-shorter-name-control-plane-w5plq.my.really.long.domain.name     Ready    control-plane,master   5m43s   v1.22.9+vmware.1
a-shorter-name-md-0-5455c4fc5b-bpq2p.my.really.long.domain.name   Ready    <none>                 4m27s   v1.22.9+vmware.1
```

```text
Validating configuration...
Creating workload cluster 'a-shorter-name'...
Waiting for cluster to be initialized...
cluster control plane is still being initialized: WaitingForControlPlane
cluster control plane is still being initialized: ScalingUp
Waiting for cluster nodes to be available...
Waiting for cluster autoscaler to be available...
Waiting for addons installation...
Waiting for packages to be up and running...
Workload cluster 'a-shorter-name' created
```
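Since Cluster API cannot check this for you, a rough pre-check can be done by hand before picking a cluster name. The minimal bash sketch below estimates the longest cluster name that keeps every node FQDN within 63 characters for a given domain. It assumes the node-name shapes visible in the `kubectl get nodes` output above (`<cluster>-control-plane-<5 random characters>` for control plane nodes, `<cluster>-md-0-<10 characters>-<5 characters>` for workers in the first machine deployment); these shapes are inferred from this post rather than taken from Cluster API documentation, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: estimate the longest cluster name that keeps every
# node FQDN (<cluster><suffix>.<domain>) within 63 characters.
# ASSUMPTION: suffix shapes are inferred from the node names in this post,
# with "x" standing in for the generated characters:
#   control plane: "-control-plane-xxxxx"    (20 characters)
#   md-0 worker:   "-md-0-xxxxxxxxxx-xxxxx"  (22 characters)
max_cluster_name_len() {
  local domain="$1" limit=63
  local cp_suffix="-control-plane-xxxxx"
  local md_suffix="-md-0-xxxxxxxxxx-xxxxx"
  # The longest suffix determines the budget left for the cluster name.
  local longest=${#cp_suffix}
  if [ "${#md_suffix}" -gt "$longest" ]; then
    longest=${#md_suffix}
  fi
  # Subtract the suffix, the separating dot, and the domain from the limit.
  echo $(( limit - longest - 1 - ${#domain} ))
}

max_cluster_name_len my.really.long.domain.name   # prints 14
```

For the lab domain this gives 14, which is exactly the length of `a-shorter-name`; accordingly, the worker node FQDN shown above is exactly 63 characters long. Treat the result as an estimate: additional machine deployments, or a TKG version that generates node names differently, would need different suffixes.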

Unfortunately, in this particular environment, the DNS registration by DHCP is an environmental constraint that cannot be changed.

While this is not a Cluster API or TKG issue as such, it would be great to have better logging that surfaces what is wrong with the deployment instead of letting it hang silently. Hopefully, that will improve in future releases.

Lessons learned:

- Make sure your node names don't exceed 63 characters. If your DHCP server registers its clients in DNS, remember that the domain suffix counts toward that limit, and take this into account when choosing a name for your cluster.

- If your DHCP server registers its clients in DNS, I strongly recommend finding a way to disable that registration for your Kubernetes nodes, if possible.

- If your domain name contains `cluster.local`, that may lead to even more significant issues, since `cluster.local` is also the default in-cluster DNS domain in Kubernetes.

- If your cluster fails to initialize, look at the cloud-init logs on the control plane node (`/var/log/cloud-init-output.log`). Since cloud-init is responsible for executing `kubeadm init`, that's where you should start investigating.
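As a starting point for that last lesson, here is a minimal sketch that scans the cloud-init output log for the two hostname-related messages shown earlier in this post. The function name is hypothetical, and the patterns are taken from the log excerpt above, so they will not catch other kinds of failures.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print hostname-related errors from a cloud-init output
# log, with line numbers. The patterns match only the two messages shown in
# this post; other failures need their own patterns.
# Exit status is grep's: 0 = match found, 1 = no match, 2 = log unreadable.
find_hostname_errors() {
  local log="${1:-/var/log/cloud-init-output.log}"
  grep -nE 'hostname: name too long|\[WARNING Hostname\]' "$log"
}

# Usage on the control plane node, as root:
#   find_hostname_errors
#   find_hostname_errors /path/to/copied/cloud-init-output.log
```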

*In case you’re wondering, the above logs and screenshots were taken from my lab. I had to reproduce this issue somewhere to compose this post. :)