HashiCorp Vault Intermediate CA Setup with Cert-Manager and Microsoft Root CA

In this post, we’ll explore how to set up HashiCorp Vault as an Intermediate Certificate Authority (CA) on a Kubernetes cluster, using a Microsoft CA as the Root CA. We’ll then integrate this setup with cert-manager, a powerful Kubernetes add-on for automating the management and issuance of TLS certificates.

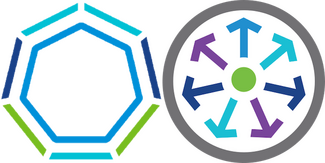

The architecture diagram above shows the use case I’ve built. It has three components:

- A Microsoft Windows server is used as the Root CA of the environment.

- A Kubernetes cluster hosting shared/common services, including HashiCorp Vault. This cluster can also host other shared solutions that are consumed by other clusters. The Vault server is deployed here and serves as an intermediate CA under the Microsoft Root CA.

- A second Kubernetes cluster hosting the application(s). This cluster runs cert-manager, which is integrated with Vault and handles the management and issuance of TLS certificates from Vault through a ClusterIssuer resource. A web application running on this cluster is exposed via an ingress, and the ingress resource obtains its TLS certificate from Vault through cert-manager.
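
To make the cert-manager side more concrete, here is a minimal sketch of what a Vault-backed ClusterIssuer could look like. It is not taken from the repository used in this post: the issuer name, Vault address, PKI mount (`pki_int`), role (`example-role`), and token-based authentication via a Secret named `vault-token` are all assumptions chosen for illustration, and your environment may use a different auth method such as Kubernetes or AppRole.

```shell
# Write a hypothetical ClusterIssuer manifest to a local file.
# Every name and address below is a placeholder, not a value from this post.
cat > vault-clusterissuer.yaml <<'EOF'
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: vault-issuer                        # hypothetical issuer name
spec:
  vault:
    server: https://vault.example.com:8200  # placeholder Vault address
    path: pki_int/sign/example-role         # assumed PKI mount and role
    # caBundle: base64-encoded CA chain, needed if Vault's TLS
    # certificate is not already trusted by cert-manager
    auth:
      tokenSecretRef:                       # assumed auth method: Vault token
        name: vault-token                   # Secret in the cert-manager namespace
        key: token
EOF

# Apply it on the workload cluster once cert-manager is installed:
# kubectl apply -f vault-clusterissuer.yaml
```

An ingress would then reference this issuer through the `cert-manager.io/cluster-issuer` annotation, again using whatever issuer name you chose.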

Prerequisites

- At least one running Kubernetes cluster. To follow along with the full setup, you will need two Kubernetes clusters: one serving as the shared services cluster and the other as the workload/application cluster.

- Access to a Microsoft Root Certificate Authority (CA).

- The Helm CLI installed.

- A clone of my GitHub repository, which contains all the manifests, files, and configurations used in this post.

Setting Up HashiCorp Vault as Intermediate CA

Deploy, Initialize, and Configure Vault

Install the Vault CLI. In the following example, Ubuntu Linux is used. If you are using a different operating system, refer to these instructions.
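
As a rough sketch of the Ubuntu installation, the commands below follow HashiCorp's documented apt repository method. They are wrapped in a function (the name `install_vault_cli` is my own, purely for illustration) so that nothing runs until you call it. It assumes `wget`, `gpg`, `lsb_release`, and `sudo` are available on the machine.

```shell
# Sketch: install the Vault CLI on Ubuntu from HashiCorp's apt repository.
# The function name is hypothetical; the commands need sudo and network access.
install_vault_cli() {
  # Add HashiCorp's package signing key to a dedicated keyring.
  wget -O - https://apt.releases.hashicorp.com/gpg \
    | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg

  # Register the repository for this machine's architecture and Ubuntu release.
  echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" \
    | sudo tee /etc/apt/sources.list.d/hashicorp.list

  # Refresh the package index and install the vault package.
  sudo apt update && sudo apt install -y vault
}
```

Call `install_vault_cli`, then run `vault version` to confirm that the CLI is on your path.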
